Paragraph 1

This year marks exactly two centuries since the publication of Frankenstein; or, The Modern Prometheus, by Mary Shelley. Even before the invention of the electric light bulb, the author produced a remarkable work of speculative fiction that would foreshadow many ethical questions to be raised by technologies yet to come.

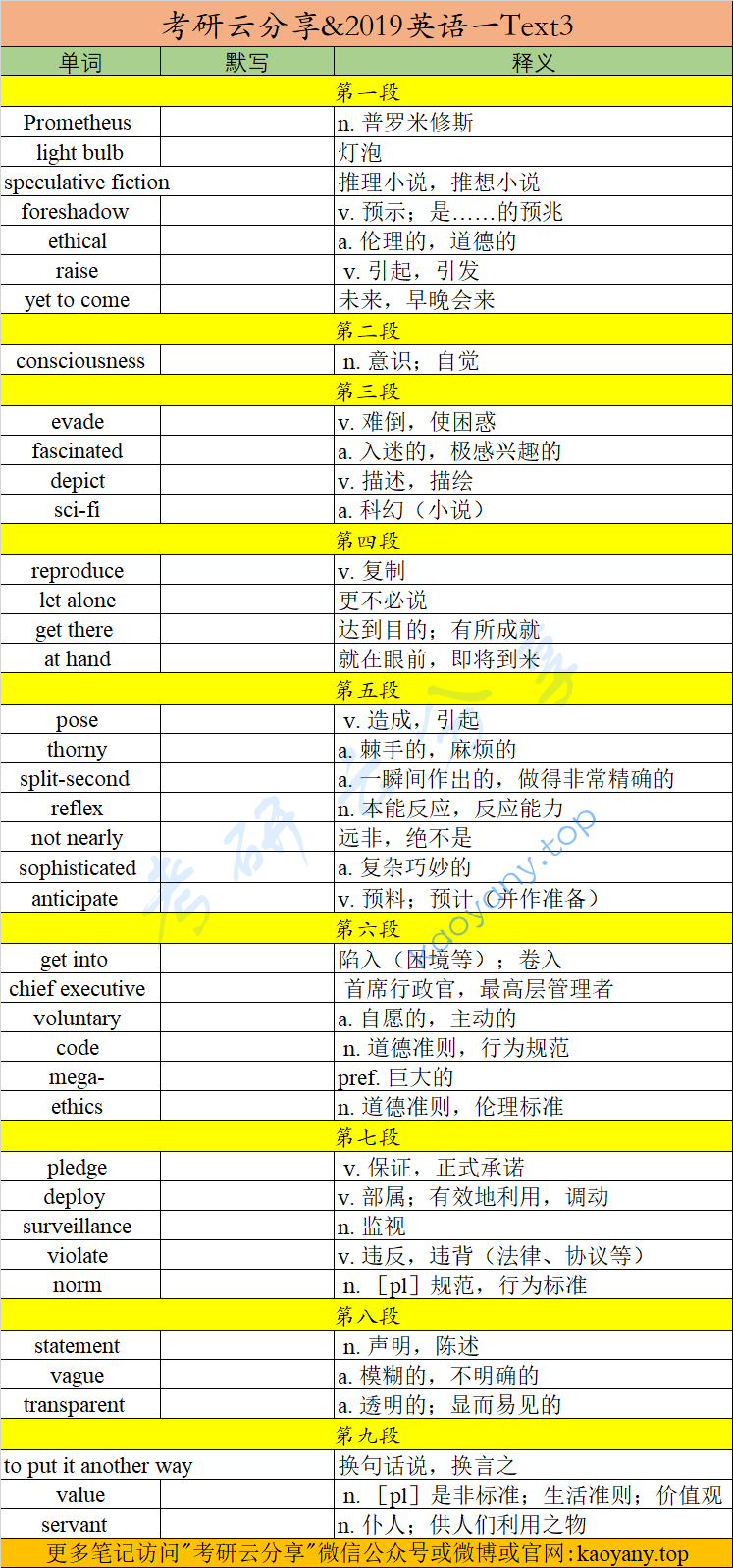

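Words & Phrases

Prometheus n. a figure in Greek mythology whose name means “forethought”; in some myths, the creator of humankind

light bulb: an electric lamp

speculative fiction: fiction that imagines worlds, events, or technologies beyond present reality (e.g. science fiction)

foreshadow v. to presage; to be a sign of (something to come)

ethical a. relating to ethics; moral

raise v. to give rise to; to bring up (a question)

yet to come: still to come; in the future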

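Translation

This year marks exactly two hundred years since the publication of Mary Shelley’s Frankenstein; or, The Modern Prometheus. Even before the electric light bulb was invented, the author created a remarkable work of speculative fiction that foreshadowed many of the ethical questions that future technologies would raise.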

Paragraph 2

Today the rapid growth of artificial intelligence (AI) raises fundamental questions: “What is intelligence, identity, or consciousness? What makes humans humans?”

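Words & Phrases

consciousness n. awareness; self-awareness

Translation

Today, the rapid development of artificial intelligence (AI) raises some fundamental questions: “What are intelligence, identity, and consciousness? What makes humans human?”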

Paragraph 3

What is being called artificial general intelligence, machines that would imitate the way humans think, continues to evade scientists. Yet humans remain fascinated by the idea of robots that would look, move, and respond like humans, similar to those recently depicted on popular sci-fi TV series such as “Westworld” and “Humans”.

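Words & Phrases

evade v. to elude; to remain beyond the reach of

fascinated a. captivated; intensely interested

depict v. to describe; to portray

sci-fi a. (of) science fiction

Translation

So-called artificial general intelligence, that is, machines that imitate the way humans think, still eludes scientists. Yet humans remain fascinated by the idea of robots that look, move, and respond like humans, similar to those recently depicted in popular sci-fi TV series such as “Westworld” and “Humans”.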

Paragraph 4

Just how people think is still far too complex to be understood, let alone reproduced, says David Eagleman, a Stanford University neuroscientist. “We are just in a situation where there are no good theories explaining what consciousness actually is and how you could ever build a machine to get there.”

Words & Phrases

reproduce v. to replicate; to copy

let alone: not to mention; much less

get there: to achieve one’s goal; to succeed

Translation

Human thinking is still far too complex to be understood, let alone reproduced, says David Eagleman, a neuroscientist at Stanford University. “We are simply in a situation where there are no good theories explaining what consciousness actually is, or how you could ever build a machine to achieve it.”

Paragraph 5

But that doesn’t mean crucial ethical issues involving AI aren’t at hand. The coming use of autonomous vehicles, for example, poses thorny ethical questions. Human drivers sometimes must make split-second decisions. Their reactions may be a complex combination of instant reflexes, input from past driving experiences, and what their eyes and ears tell them in that moment. AI “vision” today is not nearly as sophisticated as that of humans. And to anticipate every imaginable driving situation is a difficult programming problem.

Words & Phrases

at hand: close at hand; imminent

pose v. to create (a problem); to raise (a question)

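thorny a. difficult; troublesome

split-second a. made or done in an instant

reflex n. an instinctive reaction; reaction speed

not nearly: far from; not at all

sophisticated a. complex and refined

anticipate v. to foresee; to expect (and prepare for)

Translation

But this does not mean that crucial ethical issues involving AI are not already at hand. The coming use of autonomous vehicles, for example, raises thorny ethical questions. Human drivers sometimes have to make decisions in a split second. Their reactions may be a complex combination of instant reflexes, input from past driving experience, and what their eyes and ears tell them at that moment. Today’s AI “vision” is nowhere near as sophisticated as human vision, and anticipating every imaginable driving situation is a difficult programming problem.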

Paragraph 6

Whenever decisions are based on masses of data, “you quickly get into a lot of ethical questions,” notes Tan Kiat How, chief executive of a Singapore-based agency that is helping the government develop a voluntary code for the ethical use of AI. Along with Singapore, other governments and mega-corporations are beginning to establish their own guidelines. Britain is setting up a data ethics center. India released its AI ethics strategy this spring.

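Words & Phrases

get into: to get caught up in (difficulties, etc.); to become involved in

chief executive: the highest-ranking executive of an organization

voluntary a. done by choice; not compulsory

code n. a code of conduct; a set of ethical rules

mega- pref. very large; huge

ethics n. moral principles; ethical standards

Translation

Whenever decisions are based on large amounts of data, “you quickly get into a lot of ethical questions,” notes Tan Kiat How. He is chief executive of a Singapore-based agency that is helping the government draw up a voluntary code for the ethical use of AI. Like Singapore, other governments and large corporations are beginning to set their own guidelines. Britain is setting up a data ethics center, and India released its AI ethics strategy this spring.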

Paragraph 7

On June 7 Google pledged not to “design or deploy AI” that would cause “overall harm,” or to develop AI-directed weapons or use AI for surveillance that would violate international norms. It also pledged not to deploy AI whose use would violate international laws or human rights.

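Words & Phrases

pledge v. to promise formally; to vow

deploy v. to position (troops, etc.) for action; to put to effective use

surveillance n. close monitoring; watching

violate v. to break (a law, agreement, etc.)

norm n. (usu. pl.) standards of behavior; norms

Translation

On June 7, Google made three pledges. It would not “design or deploy AI” that would cause “overall harm.” It would not develop AI-directed weapons. It would not use AI for surveillance that would violate international norms. It also pledged not to deploy AI whose use would violate international law or human rights.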

Paragraph 8

While the statement is vague, it represents one starting point. So does the idea that decisions made by AI systems should be explainable, transparent, and fair.

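Words & Phrases

statement n. a formal announcement; a statement

vague a. unclear; imprecise

transparent a. transparent; obvious

Translation

Although the statement is vague, it represents a starting point. So does the idea that decisions made by AI systems should be explainable, transparent, and fair.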

Paragraph 9

To put it another way: How can we make sure that the thinking of intelligent machines reflects humanity’s highest values? Only then will they be useful servants and not Frankenstein’s out-of-control monster.

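Words & Phrases

to put it another way: in other words

value n. (usu. pl.) standards of right and wrong; principles; values

servant n. a servant; something that serves people’s needs

Translation

In other words: how can we ensure that the thinking of intelligent machines reflects humanity’s highest values? Only then will they be useful servants rather than Frankenstein’s out-of-control monster.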

Questions

31. Mary Shelley’s novel Frankenstein is mentioned because it____.

[A] fascinates AI scientists all over the world.

[B] has remained popular for as long as 200 years.

[C] involves some concerns raised by AI today.

[D] has sparked serious ethical controversies.

32. In David Eagleman’s opinion, our current knowledge of consciousness____.

[A] helps explain artificial intelligence.

[B] can be misleading to robot making.

[C] inspires popular sci-fi TV series.

[D] is too limited for us to reproduce it.

33. The solution to the ethical issues brought by autonomous vehicles____.

[A] can hardly ever be found.

[B] is still beyond our capacity.

[C] causes little public concern.

[D] has aroused much curiosity.

34. The author’s attitude toward Google’s pledge is one of____.

[A] affirmation.

[B] skepticism.

[C] contempt.

[D] respect.

35. Which of the following would be the best title for the text?

[A] AI’s Future: In the Hands of Tech Giants

[B] Frankenstein, the Novel Predicting the Age of AI

[C] The Conscience of AI: Complex But Inevitable

[D] AI Shall Be Killers Once Out of Control

Answer Key

CDBAC

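Explanations

31. Detail question. The stem points to paragraph 1. Its last sentence calls the novel “speculative fiction that would foreshadow many ethical questions to be raised by technologies yet to come.” “Ethical questions” corresponds to “concerns” in option [C], so [C] is correct.

32. Opinion question. The name David Eagleman and the word “consciousness” point to paragraph 4. Eagleman says that how people think is “still far too complex to be understood, let alone reproduced.” He adds that “there are no good theories explaining what consciousness actually is and how you could ever build a machine to get there.” Our understanding of consciousness is therefore too limited for us to reproduce it in a machine, so the answer is [D].

33. Detail question. The stem points to paragraph 5: “AI ‘vision’ today is not nearly as sophisticated as that of humans. And to anticipate every imaginable driving situation is a difficult programming problem.” In other words, AI “vision” cannot yet match human perception, and programming a machine to anticipate every possible driving situation is hard. Solving the ethical issues raised by autonomous vehicles is therefore still beyond our current capabilities, so the answer is [B].

34. Attitude question. The stem points to paragraph 8, which opens with a concessive “while”: “While the statement is vague, it represents one starting point.” The concession admits a weakness (the pledge is vague). The main clause carries the author’s actual view, which is positive: the pledge is a starting point. “Affirmation” (approval) matches this, so the answer is [A].

35. Main-idea question. The first paragraph uses Mary Shelley’s novel to introduce the topic: the ethical questions raised by new technologies. Paragraph 5 then states that crucial ethical issues involving AI are already at hand. AI and its ethics recur throughout the text. “Complex” in [C] echoes the difficulties the author describes, and “Inevitable” echoes “at hand.” The answer is therefore [C].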
